This is the talk I gave at the GoManila Meetup held on September 23, 2019. You can view the slides here and the video recording here (starts at the 53-minute mark).

We used to throw code over the wall, only ensuring it worked in our development environment. Production was a dangerous place that specialized people known as Operators/Ops had to set up, manage, and keep online. Much of what used to be physical devices has since moved into software, as code and in the cloud, and APIs and abstractions like Docker and Kubernetes have given us a world where a programmer can go from development to production all by themselves. This is what you might call the “DevOps” movement.

“DevOps is the combination of cultural philosophies, practices, and tools that increases an organization’s ability to deliver applications and services at high velocity…” - Amazon.com

This statement can be simplified as:

"DevOps increases an organization’s ability to deliver"

We all want to deploy in faster iterations, test our code more quickly, get the minimum viable product to market ahead of competitors, and receive feedback from customers. We want to deliver features of ever-increasing scope, with large engineering teams making thousands of changes in production daily. Having a DevOps culture is a differentiator in today’s market; hopefully, it will be the standard of tomorrow.

Today, though, the landscape of tools and solutions to enable this culture looks like this:

There are too many choices, and each of them has its own subset of tools and extensions. You have to be mindful of the rabbit hole you are descending into.

The Problem

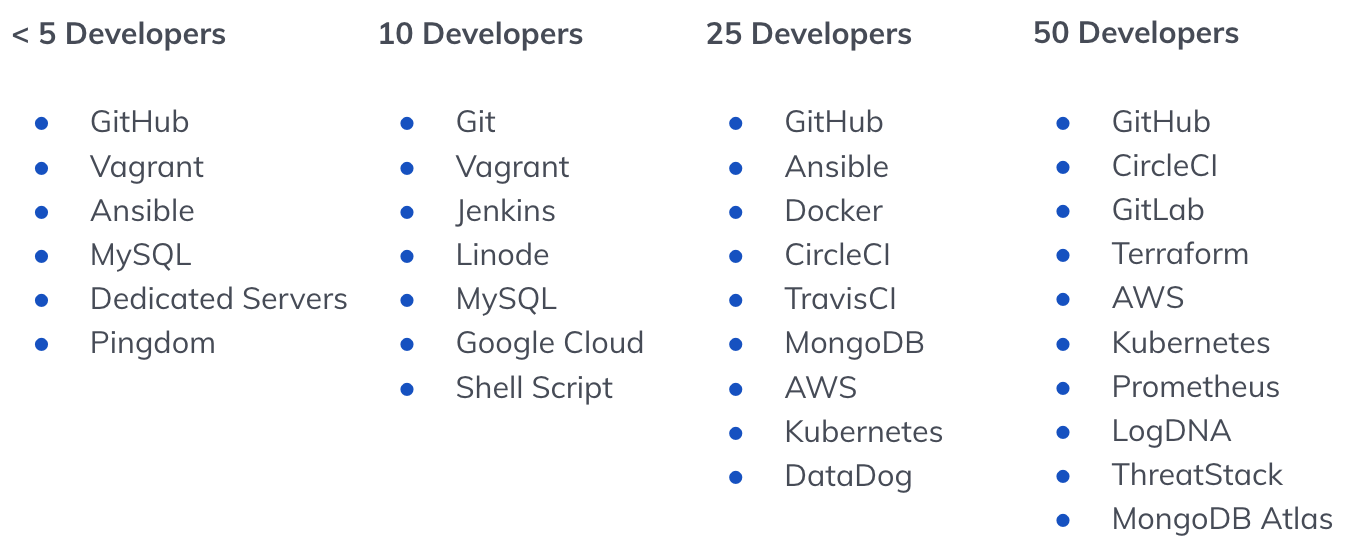

The truth is every company has to make its own choice of tools and how to use them. In my experience the larger the organization, the more tools and the more complex the processes are.

Smaller organizations can make do with ad-hoc tools and have fewer requirements to get to Production. The larger the organization grows, the more controls are put in place and the spread of tools grows. You get strict requirements and processes, especially in certain industries with regulations or if you deal with enterprise customers.

Having every developer learn these workflows and tools and constantly stay up to date with the changes is a challenge in itself. This is why enterprises prefer to purchase a vendor solution, and it is where a product like GitLab is well positioned. Imagine how long it would take one person to learn how to use all of the above. Unless they work in Infrastructure, such knowledge does not directly benefit their daily work.

Some of the problems we experienced were:

- Tools are hard to learn and they evolve as technology and the company change.

- Standardization is important as the number of engineers grows, especially for starting new projects.

- Onboarding new engineers is costly, and the faster you can get them deploying reliably the better.

- Infrastructure code should live right next to the application, or else you will create silos again.

- Secret management is usually an afterthought and a hard problem.

- Permissions and controls get put in place once you reach a certain size or need to comply with regulations. This can slow you down if you don’t abstract it away.

- Observing the code in Production and knowing whether it works is a whole different set of skills.

Solution

A solution is to codify all these tools and processes. Saying it is simpler than doing it, but this is what Continuous Integration and Continuous Delivery are all about. You can use your favorite CI tool, or just use GitLab, and get most of the work done in YAML. This only solves half the problem, though. Doing complex logic in YAML or Bash is not the best choice for portability or long-lived code.

You want these processes and tools to be usable in any environment, whether local, CI, or Production. This is why we create internal tools: to solve problems in a repeatable way that can scale. For developers, the right tool most of the time is a command-line tool, and there is no better language for CLI tools than Go!

Shippy

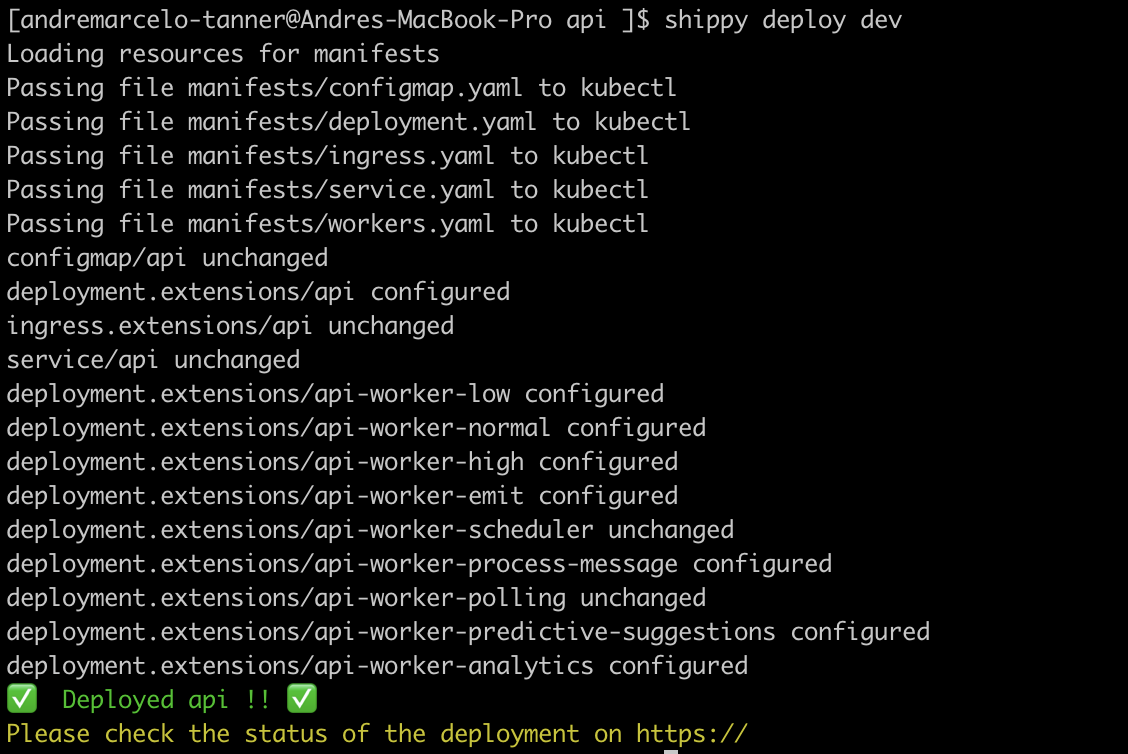

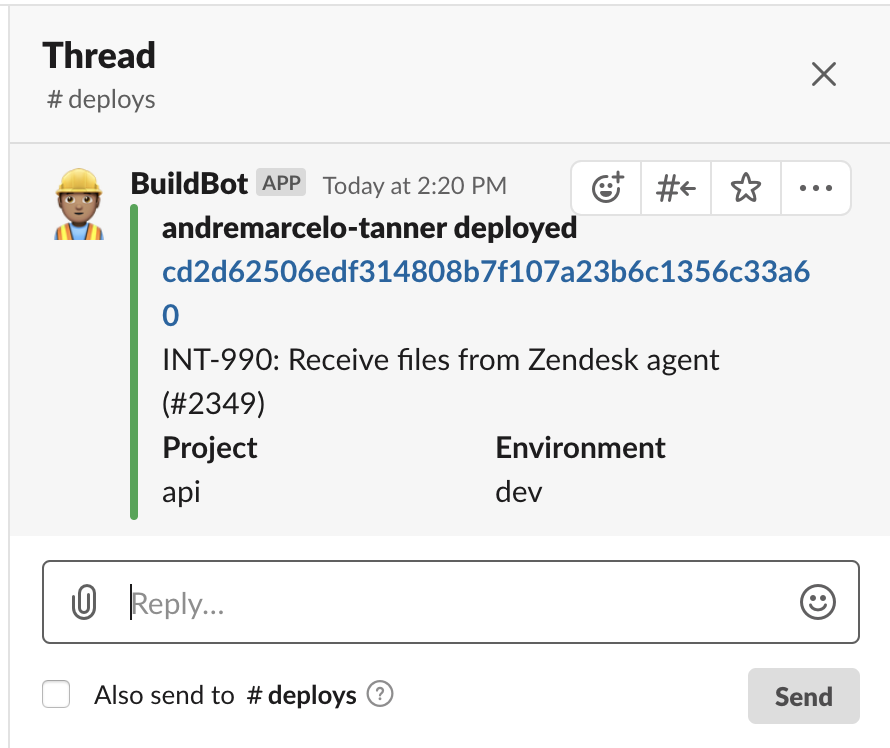

At Ada, we solved this by creating Shippy, a CLI tool written in Go. It is an all-in-one tool that greatly improves our developers’ productivity. It contains very specific workflows for our internal processes and allows us to deploy to Production in one single command:

It looks simple, but it removes overhead and cognitive load from our developers. Now they just have to learn a few commands rather than the plethora of tools out there.

In a few commands we encapsulated:

- AWS Elastic Container Registry Login

- Docker Build & Push

- Kubernetes Deployment

- Kontemplate, a YAML templating tool for Kubernetes because.

- Secret management via AWS Key Management Service and EJSON-KMS

- Change management processes, like making sure the wrong code cannot get deployed and that every deployment has approval.

You might already do all of this manually, but abstracting it away removes much of the pain of everyday development in a growing company.
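To make the idea of encapsulation concrete, here is a minimal sketch of how a single command could chain such steps and stop at the first failure. This is not Shippy's actual code: the `Step` type, `runPipeline`, `errNotApproved`, and the stubbed step bodies are all hypothetical, and the step names only mirror the list above.

```go
package main

import (
	"errors"
	"fmt"
	"os"
)

// errNotApproved is returned by the hypothetical approval check below.
var errNotApproved = errors.New("change has not been approved")

// Step is one named stage of a deployment (hypothetical type).
type Step struct {
	Name string
	Run  func() error
}

// runPipeline runs the steps in order and stops at the first failure.
// It returns the names of the steps that completed successfully.
func runPipeline(steps []Step) ([]string, error) {
	var done []string
	for _, s := range steps {
		if err := s.Run(); err != nil {
			return done, fmt.Errorf("step %q failed: %w", s.Name, err)
		}
		done = append(done, s.Name)
	}
	return done, nil
}

func main() {
	// Stand-in for a real change-management lookup.
	approved := true
	// Placeholder for the real work each step would do.
	stub := func() error { return nil }

	steps := []Step{
		{Name: "check approval", Run: func() error {
			if !approved {
				return errNotApproved
			}
			return nil
		}},
		{Name: "registry login", Run: stub},
		{Name: "build and push image", Run: stub},
		{Name: "render templates", Run: stub},
		{Name: "decrypt secrets", Run: stub},
		{Name: "deploy to cluster", Run: stub},
	}

	done, err := runPipeline(steps)
	for _, name := range done {
		fmt.Println("ok:", name)
	}
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
}
```

Putting the approval check first means nothing gets built or pushed for a change that should not ship, which is the kind of guardrail that is easy to forget when running the steps by hand.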

Command Line Tools In Go

Why did we build this in Go? There are a lot of great things about Go, but the main advantages of using it for command-line tools are:

- It compiles for Linux, macOS, and Windows very easily. You hardly have to think about this as a developer.

- The binary is relatively small and runs very fast. No runtime environment is required, so you can run it on any system as long as you have the binary.

- Many easy-to-use packages. There is the Go standard library as well as many great third-party packages that do most of the heavy lifting for you, allowing you to focus on what is specific to your application.

- Many DevOps and Cloud tools are built in Go or have Go SDKs available. Since Docker was released, many developer tools have also been written in Go and are thus very easy to use in your programs. Other examples are Kubernetes and Terraform. There are also SDKs for AWS, Google Cloud, and Azure.
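As a small illustration of the cross-platform point, the sketch below prints the platform a binary was built for. The `binaryName` helper and the `mytool` name are made up for this example; `runtime.GOOS` and `runtime.GOARCH` are part of the standard library.

```go
package main

import (
	"fmt"
	"runtime"
)

// binaryName returns the file name a build for the given OS would typically
// use: Windows executables carry an .exe suffix, other platforms do not.
// Hypothetical helper for illustration.
func binaryName(base, goos string) string {
	if goos == "windows" {
		return base + ".exe"
	}
	return base
}

func main() {
	// runtime.GOOS and runtime.GOARCH report the platform this binary was
	// compiled for. They follow the GOOS and GOARCH environment variables
	// given to "go build", for example GOOS=windows GOARCH=amd64.
	fmt.Printf("built for %s/%s\n", runtime.GOOS, runtime.GOARCH)
	fmt.Println("binary name:", binaryName("mytool", runtime.GOOS))
}
```

Building the same source with different `GOOS` and `GOARCH` values is usually all it takes to produce binaries for the other platforms, as long as the code does not depend on cgo or OS-specific packages.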

Go Modules

Go modules are the system by which you use libraries from other projects. You can use the same modules that Kubernetes uses to make your code just as awesome.

Here are some modules I would recommend when writing CLI programs:

- Cobra - for your commands and parameters, extremely powerful.

- Viper - for all your configuration needs, like reading YAML and JSON, reading parameters from environment variables, and more. Hail Cobra!

- AWS SDK - It’s huge, but it brings the power of the AWS API into your application.

- Docker - If you use Docker, you can do everything it can by using the Docker packages. Alternatively, you can swap in another OCI image builder like Kaniko or Buildah.
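To show the shape of a subcommand-based CLI without pulling in any dependency, here is a minimal sketch that uses only the standard library's `flag` package rather than Cobra. The `deploy` and `version` commands, the `-env` and `-tag` flags, and the `tool` name are assumptions made for this example, not Shippy's real interface. In a real tool, Cobra would take over the dispatching and help output that is done by hand here.

```go
package main

import (
	"errors"
	"flag"
	"fmt"
	"io"
	"os"
)

// deployOptions holds the flags of the hypothetical "deploy" subcommand.
type deployOptions struct {
	Env string
	Tag string
}

// parseDeploy parses the arguments that follow "deploy".
func parseDeploy(args []string) (deployOptions, error) {
	var opts deployOptions
	fs := flag.NewFlagSet("deploy", flag.ContinueOnError)
	fs.SetOutput(io.Discard) // the caller reports errors itself
	fs.StringVar(&opts.Env, "env", "staging", "target environment")
	fs.StringVar(&opts.Tag, "tag", "", "image tag to deploy (required)")
	if err := fs.Parse(args); err != nil {
		return opts, err
	}
	if opts.Tag == "" {
		return opts, errors.New("deploy: -tag is required")
	}
	return opts, nil
}

// run dispatches on the first argument, the way a subcommand CLI does.
func run(args []string, out io.Writer) error {
	if len(args) == 0 {
		fmt.Fprintln(out, "usage: tool <deploy|version> [flags]")
		return nil
	}
	switch args[0] {
	case "deploy":
		opts, err := parseDeploy(args[1:])
		if err != nil {
			return err
		}
		fmt.Fprintf(out, "deploying %s to %s\n", opts.Tag, opts.Env)
		return nil
	case "version":
		fmt.Fprintln(out, "tool dev")
		return nil
	default:
		return fmt.Errorf("unknown command %q", args[0])
	}
}

func main() {
	if err := run(os.Args[1:], os.Stdout); err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
}
```

Keeping `run` separate from `main` and passing in the arguments and output writer makes the command logic easy to test without spawning a process.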

The DevOps Method

Creating a CLI tool is not a simple undertaking, and not every process you do should automatically be transformed into a CLI command. There should be a process by which you turn the greatest pain into the simplest abstraction:

Do it once - the first time you take on a process, you will discover the difficulties of how to solve your problem and what tools you would need.

Do it twice - the next time you tackle this problem you will be better prepared, because you have already gone through the pain of figuring out your solution. You should be able to solve the problem faster.

Document it - if you need to do anything more than twice you better document your process so that you can act on it in a repeatable manner and so that anyone else in your team can use the same process without your help. Good documentation is the foundation for any great solution.

Script it - depending on how complex the problem is, you can convert your documentation into a script to automate away most of the manual toil. Whether this is a Bash, Ruby, or Python script, it will allow you to use programming concepts in solving your problem, and this way you can find the corner cases: which parts of your process are hard to solve and which are simple to automate. I like to use a lot of pseudocode or real code in my documentation, so this part is mostly a copy-paste for me.

How many people need this solution? - If only those with domain knowledge will be using this process, you may not need another abstraction to improve it. If it’s just you and your team, your documentation and scripts may suffice and get you a long way. Over-optimizing, and doing it too early, can make you feel like you are making progress, but in reality you are spending time on work with little impact.

Get feedback - if you will not be the only user of this solution, ask the other potential users what similar problems they are having. Share your solution to see how they can use it, and let them try it so you can observe how other people would use your process, tools, and solutions. You might be surprised that not everyone sees things your way.

Iterate - on your process, documentation, and scripts based on the feedback. What can you improve? What can you remove? The best process is no process. Is this needed?

Now convert it to a custom tool - only after all the previous steps should you put in the effort to create custom tooling in Go or another language. By now you should have identified the pain points of the users in your organization, seen where your process has holes, and optimized it to the point where it is ready to be shared with everyone. This is the point where you know how much of an impact your tooling can make, and where the benefits are most likely to outweigh the cost of the time spent developing it.

Document, Iterate, Rinse, Repeat - Now that you have a custom tool, start from the beginning and talk to your users, improve your tool and process and maybe eventually even get rid of it, but at least you know you are solving a problem that matters.

Learnings

Our internal tool Shippy was created long before I joined Ada but over the years of it being used and iterating on it we have learned some lessons:

- Keep it modular - this is an idiom in Go but when you are hacking on an internal tool you may not put as much polish into it as a production application that your customers see. This can lead to tech debt that makes it harder to upgrade it later on. Have a good base to start your application on and a lot of this can be done by using great 3rd party libraries.

- Read the code of other CLI tools out there - You can learn a lot by seeing how Docker or Kubernetes structure their commands and internal packages. Go code is easy to read because it’s all formatted the same way, so don’t be afraid to peek into the source code of the bigger projects out there. You could learn a thing or two from them.

- Expect to swap out the underlying tools and libraries - What worked for you at 10 people may not work for you at 50, 100, or 1000. Even your processes may change. With a custom tool you can keep the abstraction layer of commands the same but swap out the code underneath for a different implementation when it becomes necessary, and no one will know. This is one of the great things about internal tools: if the company changes, you can change everything with it. It’s not like open source tools, where you have to support many different use cases or keep backward compatibility all the time.

- Make it easy to upgrade - When was the last time you downloaded the latest version of your browser? Keeping all your users on the latest version of your application can remove a lot of bug reports, and let you iterate and push your code out to all your users as soon as it’s done. Get your users used to updating their tools automatically and the version number will be a thing of the past.
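The point about swapping out what sits underneath maps naturally onto Go interfaces. The following is a minimal sketch under assumed names: `Deployer`, `scriptDeployer`, `apiDeployer`, and `newDeployer` are hypothetical and stand in for whatever tools a team starts with and later replaces.

```go
package main

import (
	"fmt"
	"os"
)

// Deployer is the stable contract the CLI commands depend on.
type Deployer interface {
	Deploy(image string) string
}

// scriptDeployer stands in for an early implementation,
// for example one that shells out to scripts.
type scriptDeployer struct{}

func (scriptDeployer) Deploy(image string) string {
	return "script backend deployed " + image
}

// apiDeployer stands in for a later replacement that talks to an API.
type apiDeployer struct{}

func (apiDeployer) Deploy(image string) string {
	return "api backend deployed " + image
}

// newDeployer picks the implementation. Command code only ever sees the
// Deployer interface, so the choice can change without touching callers.
func newDeployer(backend string) (Deployer, error) {
	switch backend {
	case "script":
		return scriptDeployer{}, nil
	case "api":
		return apiDeployer{}, nil
	default:
		return nil, fmt.Errorf("unknown backend %q", backend)
	}
}

func main() {
	d, err := newDeployer("api")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println(d.Deploy("myapp:v1"))
}
```

Because the command that users type stays the same, moving from one backend to the other is an internal change that ships with the next release of the tool.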

What’s next?

Our tool Shippy may be the first, but it will not be the last of our custom internal tools. What we do know is that it empowers our users/developers and makes them happy and vastly more productive. For a programmer like me, you want nothing more than for your code to have a great impact.

Do you have a custom tool or script at your organization? What problems do you run into when trying to get more people to adopt it? What other ways have you solved these problems that we might not have thought about? Let me know @kzapkzap on Twitter.